NISTIR 8280

Face Recognition Vendor Test (FRVT)

Part 3: Demographic Effects

Patrick Grother

Mei Ngan

Kayee Hanaoka

This publication is available free of charge from:

https://doi.org/10.6028/NIST.IR.8280


Information Access Division

Information Technology Laboratory


December 2019

U.S. Department of Commerce

Wilbur L. Ross, Jr., Secretary

National Institute of Standards and Technology

Walter Copan, NIST Director and Undersecretary of Commerce for Standards and Technology

Certain commercial entities, equipment, or materials may be identified in this document in order to describe an experimental procedure or concept adequately. Such identification is not intended to imply recommendation or endorsement by the National Institute of Standards and Technology, nor is it intended to imply that the entities, materials, or equipment are necessarily the best available for the purpose.

National Institute of Standards and Technology Interagency or Internal Report 8280

Natl. Inst. Stand. Technol. Interag. Intern. Rep. 8280, 81 pages (December 2019)


2019/12/19 08:14:00 FRVT - FACE RECOGNITION VENDOR TEST - DEMOGRAPHICS 1

EXECUTIVE SUMMARY

OVERVIEW

This is the third in a series of reports on ongoing face recognition vendor tests (FRVT) executed by the National Institute of Standards and Technology (NIST). The first two reports cover, respectively, the performance of one-to-one face recognition algorithms used for verification of asserted identities, and the performance of one-to-many face recognition algorithms used for identification of individuals in photo databases. This document extends those evaluations to document accuracy variations across demographic groups.

MOTIVATION

The recent expansion in the availability, capability, and use of face recognition has been accompanied by assertions that demographic dependencies could lead to accuracy variations and potential bias. A report from Georgetown University [14] noted prior studies [22], articulated sources of bias, described the potential impacts, particularly in a policing context, and discussed policy and regulatory implications. Additionally, this work is motivated by studies of demographic effects in more recent face recognition [9, 16, 23] and gender estimation algorithms [5, 36].

AIMS AND SCOPE

NIST has conducted tests to quantify demographic differences in contemporary face recognition algorithms. This report provides details about the recognition process, notes where demographic effects could occur, details specific performance metrics and analyses, gives empirical results, and recommends research into the mitigation of performance deficiencies. NIST intends this report to inform discussion and decisions about the accuracy, utility, and limitations of face recognition technologies. Its intended audience includes policy makers, face recognition algorithm developers, systems integrators, and managers of face recognition systems concerned with mitigation of risks implied by demographic differentials.

WHAT WE DID

The NIST Information Technology Laboratory (ITL) quantified the accuracy of face recognition algorithms for demographic groups defined by sex, age, and race or country of birth. We used both one-to-one verification algorithms and one-to-many identification search algorithms. These were submitted to the FRVT by corporate research and development laboratories and a few universities. As prototypes, these algorithms were not necessarily available as mature integrable products. Their performance is detailed in FRVT reports [16, 17].

We used these algorithms with four large datasets of photographs collected in U.S. governmental applications that are currently in operation:

Domestic mugshots collected in the United States.

Application photographs from a global population of applicants for immigration benefits.

Visa photographs submitted in support of visa applicants.

Border crossing photographs of travelers entering the United States.

All four datasets were collected for authorized travel, immigration, or law enforcement processes. The first three sets have good compliance with image capture standards. The last set does not, given constraints on capture duration and environment. Together these datasets allowed us to process a total of 18.27 million images of 8.49 million people through 189 mostly commercial algorithms from 99 developers.

False positive: incorrect association of two subjects (1:1 FMR; 1:N FPIR).

False negative: failed association of one subject (1:1 FNMR; 1:N FNIR).

Threshold T: as T increases, FMR and FPIR fall toward 0, while FNMR and FNIR rise toward 1.


The datasets were accompanied by sex and age metadata for the photographed individuals. The mugshots have metadata for race, but the other sets only have country-of-birth information. We restrict the analysis to 24 countries in 7 distinct global regions that have seen lower levels of long-distance immigration. While country-of-birth information may be a reasonable proxy for race in these countries, it stands as a meaningful factor in its own right, particularly for travel-related applications of face recognition.

The tests aimed to determine whether, and to what degree, face recognition algorithms differed when they processed photographs of individuals from various demographics. We assessed accuracy by demographic group and report on false negative and false positive effects. False negatives are the failure to associate one person in two images; they occur when the similarity between two photos is low, reflecting either some change in the person’s appearance or in the image properties. False positives are the erroneous association of samples of two persons; they occur when the digitized faces of two people are similar.

In the background material that follows, we give examples of how algorithms are used, and we elaborate on the consequences of errors, noting that the impacts of demographic differentials can be advantageous or disadvantageous depending on the application.

WHAT WE FOUND

The accuracy of algorithms used in this report has been documented in recent FRVT evaluation reports [16, 17]. These show a wide range in accuracy across developers, with the most accurate algorithms producing many fewer errors. These algorithms can therefore be expected to have smaller demographic differentials.

Contemporary face recognition algorithms exhibit demographic differentials of various magnitudes. Our main result is that false positive differentials are much larger than those related to false negatives and exist broadly, across many, but not all, algorithms tested. Across demographics, false positive rates often vary by factors of 10 to beyond 100 times. False negatives tend to be more algorithm-specific, and often vary by factors below 3.

False positives: Using the higher quality Application photos, false positive rates are highest in West and East African and East Asian people, and lowest in Eastern European individuals. This effect is generally large, with a factor of 100 more false positives between countries. However, with a number of algorithms developed in China, this effect is reversed, with low false positive rates on East Asian faces. With domestic law enforcement images, the highest false positives are in American Indians, with elevated rates in African American and Asian populations; the relative ordering depends on sex and varies with algorithm.

We found false positives to be higher in women than men, and this is consistent across algorithms and datasets. This effect is smaller than that due to race.

We found elevated false positives in the elderly and in children; the effects were larger in the oldest and youngest, and smallest in middle-aged adults.


False negatives: With domestic mugshots, false negatives are higher in Asian and American Indian individuals, with error rates above those in white and African American faces (which yield the lowest false negative rates). However, with lower-quality border crossing images, false negatives are generally higher in people born in Africa and the Caribbean, the effect being stronger in older individuals. These differing results relate to image quality: The mugshots were collected with a photographic setup specifically standardized to produce high-quality images across races; the border crossing images deviate from face image quality standards.

In cooperative access control applications, false negatives can be remedied by users making second attempts.

The presence of an enrollment database affords one-to-many identification algorithms a resource for mitigation of demographic effects that purely one-to-one verification systems do not have. Nevertheless, demographic differentials present in one-to-one verification algorithms are usually, but not always, present in one-to-many search algorithms. One important exception is that some developers supplied highly accurate identification algorithms for which false positive differentials are undetectable.

More detailed results are introduced in the Technical Summary.

IMPLICATIONS OF THESE TESTS

Operational implementations usually employ a single face recognition algorithm. Given algorithm-specific variation, it is incumbent upon the system owner to know their algorithm. While publicly available test data from NIST and elsewhere can inform owners, it will usually be informative to specifically measure accuracy of the operational algorithm on the operational image data, perhaps employing a biometrics testing laboratory to assist.

Since different algorithms perform better or worse in processing images of individuals in various demographics, policy makers, face recognition system developers, and end users should be aware of these differences and use them to make decisions and to improve future performance. We supplement this report with more than 1200 pages of charts contained in seventeen annexes that include exhaustive reporting of results for each algorithm. These are intended to show the breadth of the effects, and to inform the algorithm developers.

A variety of techniques might mitigate performance limitations of face recognition systems, both overall and specifically those that relate to demographics. This report includes recommendations for research into developing and evaluating the value, costs, and benefits of potential mitigation techniques; see sections 8 and 9.

Reporting of demographic effects has often been incomplete in academic papers and in media coverage. In particular, accuracy is discussed without stating the quantity of interest, be it false negatives, false positives, or failure to enroll. As most systems are configured with a fixed threshold, it is necessary to report both false negative and false positive rates for each demographic group at that threshold. This is rarely done; most reports are concerned only with false negatives. We make suggestions for augmenting reporting with respect to demographic differences and effects.


BACKGROUND: ALGORITHMS, ERRORS, IMPACTS

FACE ANALYSIS: CLASSIFICATION, ESTIMATION, RECOGNITION

Before presenting results in the Technical Summary we describe what face recognition is, contrasting it with other applications that analyze faces, and then detail the errors that are possible in face verification and identification and their impacts.

Much of the discussion of face recognition bias in recent years cites two studies [5, 36] showing poor accuracy of face gender classification algorithms on black women. Those studies did not evaluate face recognition algorithms, yet the results have been widely cited to indict their accuracy. Our work was undertaken to quantify analogous effects in face recognition algorithms. We strongly recommend that reporting of bias should include information about the class of algorithm evaluated. We use the term face analysis as an umbrella for any algorithm that consumes face images and produces some output. Within that umbrella are estimation algorithms that output some continuous quantity (e.g., age or degree of fatigue). There are also classification algorithms that aim to determine some categorical quantity such as the sex of a person or their emotional state. Face classification algorithms are built with inherent knowledge of the classes they aim to produce (e.g., happy, sad). Face recognition algorithms, however, have no built-in notion of a particular person. They are not built to identify particular people; instead they include a face detector followed by a feature extraction algorithm that converts one or more images of a person into a vector of values that relate to the identity of the person. The extractor typically consists of a neural network that has been trained on ID-labeled images available to the developer. In operations, they act as generic extractors of identity-related information from photos of persons they have usually never seen before.

Recognition proceeds as a differential operator: Algorithms compare two feature vectors and emit a similarity score. This is a vendor-defined numeric value expressing how similar the parent faces are. It is compared to a threshold value to decide whether two samples are from, or represent, the same person or not. Thus, recognition is mediated by persistent identity information stored in a feature vector (or “template”). Classification and estimation, on the other hand, are single-shot operations from one sample alone, employing machinery that is different from that used for face recognition.
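As a rough illustration of the compare-then-threshold step, the Python sketch below treats templates as plain lists of floats and uses cosine similarity as the score. Both choices are assumptions for illustration only: the text notes that scores are vendor-defined, and the algorithms tested here do not expose their templates or comparison functions. The function names are hypothetical.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Illustrative score: cosine of the angle between two templates.

    Real FRVT algorithms use their own vendor-defined scores; this is an assumption.
    """
    if len(a) != len(b):
        raise ValueError("templates must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("template has zero norm")
    return dot / (norm_a * norm_b)


def same_person(a: Sequence[float], b: Sequence[float], threshold: float) -> bool:
    """Declare a match when the score is at or above the fixed threshold."""
    return cosine_similarity(a, b) >= threshold
```

Following the report's convention, a score at or above the threshold is treated as a match, so an imposter pair that reaches the threshold is a false positive.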

VERIFICATION

Errors: A comparison of images from the same person yields a genuine or “mate” score. A comparison of images from different people yields an imposter or “nonmate” score. Ideally, nonmate scores should be low and mate scores should be high. In practice, some imposter scores are above a numeric threshold, giving false positives, and some genuine comparisons yield scores below threshold, giving false negatives.
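The error counting described above can be sketched as follows, assuming the genuine (mate) and imposter (nonmate) scores have already been computed; the scores in the example are invented. A genuine score below the threshold counts as a false non-match, and an imposter score at or above it counts as a false match.

```python
from typing import Sequence


def fnmr(genuine_scores: Sequence[float], threshold: float) -> float:
    """False non-match rate: share of genuine (mate) scores below the threshold."""
    if not genuine_scores:
        raise ValueError("no genuine scores")
    return sum(s < threshold for s in genuine_scores) / len(genuine_scores)


def fmr(imposter_scores: Sequence[float], threshold: float) -> float:
    """False match rate: share of imposter (nonmate) scores at or above the threshold."""
    if not imposter_scores:
        raise ValueError("no imposter scores")
    return sum(s >= threshold for s in imposter_scores) / len(imposter_scores)


# Invented example scores, for illustration only.
genuine = [0.91, 0.85, 0.42, 0.77]
imposter = [0.12, 0.35, 0.81, 0.05, 0.22]
```

With these numbers, a threshold of 0.7 gives FNMR = 0.25 and FMR = 0.2, while raising it to 0.9 gives FNMR = 0.75 and FMR = 0: raising the threshold trades false positives for false negatives.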

Applications: One-to-one verification is used in applications including logical access to a phone or physical access through a security checkpoint. It also supports non-repudiation, e.g., to authorize the dispensing of a prescription drug. Two photos are involved: one in the database that is compared with one taken of the person seeking access, to answer the question: “Is this the same person or not?”

Impact of errors: Errors have different implications for the system owner and for the individual whose photograph is being used, depending upon the application. In verification applications, false negatives cause inconvenience for the user. For example, an individual may not be able to get into their phone, or may be delayed entering a facility or crossing a border. These errors can usually be remediated with a second attempt. False positives, on the other hand, present a security concern to the system owner, as they allow access to imposters.


IDENTIFICATION

Identification algorithms, referred to commonly as one-to-many or “1-to-N” search algorithms, notionally compare features extracted from a search “probe” image with all feature vectors previously enrolled from “gallery” images. The algorithms return either a fixed number of the most similar candidates, or only those that are above a preset threshold. A candidate is an index and a similarity score. Some algorithms execute an exhaustive search of all N enrollments and a sort operation to yield the most similar. Other algorithms implement “fast-search” techniques [2, 19, 21, 26] that avoid many of the N comparisons and are therefore highly economical [17].
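A minimal exhaustive 1:N search, of the kind the text contrasts with fast-search techniques, might look like the sketch below. It scores the probe against every enrolled template, sorts by similarity, and then applies a candidate-list length, a threshold, or both. The dot-product score, the dictionary gallery, and all names are illustrative assumptions; no fast-search indexing is shown.

```python
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Template = Sequence[float]
Candidate = Tuple[str, float]  # (gallery identifier, similarity score)


def dot(a: Template, b: Template) -> float:
    """Illustrative similarity score (assumption): dot product of two templates."""
    return sum(x * y for x, y in zip(a, b))


def search(probe: Template,
           gallery: Dict[str, Template],
           score_fn: Callable[[Template, Template], float] = dot,
           top_k: Optional[int] = None,
           threshold: Optional[float] = None) -> List[Candidate]:
    """Exhaustive search over all N enrollments, most similar candidates first."""
    # Compare the probe with every enrolled template (no fast-search shortcut).
    candidates = [(gid, score_fn(probe, tmpl)) for gid, tmpl in gallery.items()]
    candidates.sort(key=lambda c: c[1], reverse=True)
    # Optionally keep only candidates at or above the preset threshold.
    if threshold is not None:
        candidates = [c for c in candidates if c[1] >= threshold]
    # Optionally truncate to a fixed-length candidate list.
    if top_k is not None:
        candidates = candidates[:top_k]
    return candidates


# Hypothetical two-dimensional templates, for illustration only.
gallery = {"A": [1.0, 0.0], "B": [0.6, 0.8], "C": [0.0, 1.0]}
probe = [0.8, 0.6]
```

Here `search(probe, gallery, top_k=2)` returns candidates B then A; with `threshold=0.9` only B survives, and a threshold above B's score returns an empty list.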

Identification applications: There are two broad uses of identification algorithms. First, they can be used to facilitate positive access, as in one-to-one verification but without presentation of an identity claim. For example, a subject is given access to a building solely on the basis of presenting a photograph that matches any enrolled identity with a score above threshold. Second, they can be used for so-called negative identification, where the system operator claims implicitly that searched individuals are not enrolled, for example, checking databases of gamblers previously banned from a casino.

Impacts: As with verification, the impact of a demographic differential will depend on the application. In one-to-many searches, false positives primarily occur when a search of a subject who is not present in the database yields a candidate identity for human review. This type of one-to-many search is often employed to check for a person who might be applying for a visa or driver’s license under a name different from their own. False positives may also occur when a search of someone who is enrolled produces the wrong identity with, or instead of, the correct identity. Identification algorithms produce such outcomes when the search yields a comparison score above a chosen threshold.

In identification applications such as visa or passport fraud detection, or surveillance, a false positive match to another individual could lead to a false accusation, detention, or deportation. Higher false negatives would be an advantage to an enrollee in such a system, as their fraud would go undetected, and a disadvantage to the system owner, whose security goals would be undermined.

Investigation: This is a special-case application of identification algorithms where the threshold is set to zero so that all searches will produce a fixed number of candidates. In such cases, the false positive identification rate is 100% because any search of someone not in the database will still yield candidates. Algorithms used in this way are part of a hybrid machine-human system: The algorithm offers up candidates for human adjudication, for which labor must be available. In such cases, false positive differentials from the algorithm are immaterial; the machine returns, say, 50 candidates regardless. What matters then is the human response. There is evidence for both poor [10, 42] and varied human capability, even without time constraints [34], and for sex and race performance differentials, particularly an interaction between the reviewer’s demographics and those of the photographs under review [7]. The interaction of machine and human is beyond the scope of this report, as is human efficacy.
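The sketch below computes FPIR as the fraction of nonmated searches (probes with no mate in the gallery) that return at least one candidate at or above the threshold. It assumes nonnegative scores, so that a threshold of zero lets every search return its fixed-length candidate list and FPIR reaches 100%, as stated above. The function name and candidate scores are hypothetical.

```python
from typing import Sequence


def fpir(nonmated_searches: Sequence[Sequence[float]], threshold: float) -> float:
    """False positive identification rate over searches of people not enrolled.

    Each inner sequence holds the candidate scores returned by one search;
    a search counts as a false positive if any candidate reaches the threshold.
    """
    if not nonmated_searches:
        raise ValueError("need at least one search")
    hits = sum(any(score >= threshold for score in candidates)
               for candidates in nonmated_searches)
    return hits / len(nonmated_searches)


# Hypothetical top-3 candidate scores from three searches of people not in the gallery.
searches = [
    [0.31, 0.20, 0.05],
    [0.72, 0.40, 0.11],
    [0.15, 0.09, 0.02],
]
```

At a threshold of 0.7 only the second search yields a candidate, so FPIR is one third; at a threshold of zero every search does, so FPIR is 1.0 regardless of how the scores differ across demographic groups.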


TECHNICAL SUMMARY

This section summarizes the results of the study. This is preceded by an introduction to terminology and discussion of a vital aspect in reporting demographic effects, namely that it is necessary to report both false negative and false positive error rates.

ACCURACY DIFFERENTIALS

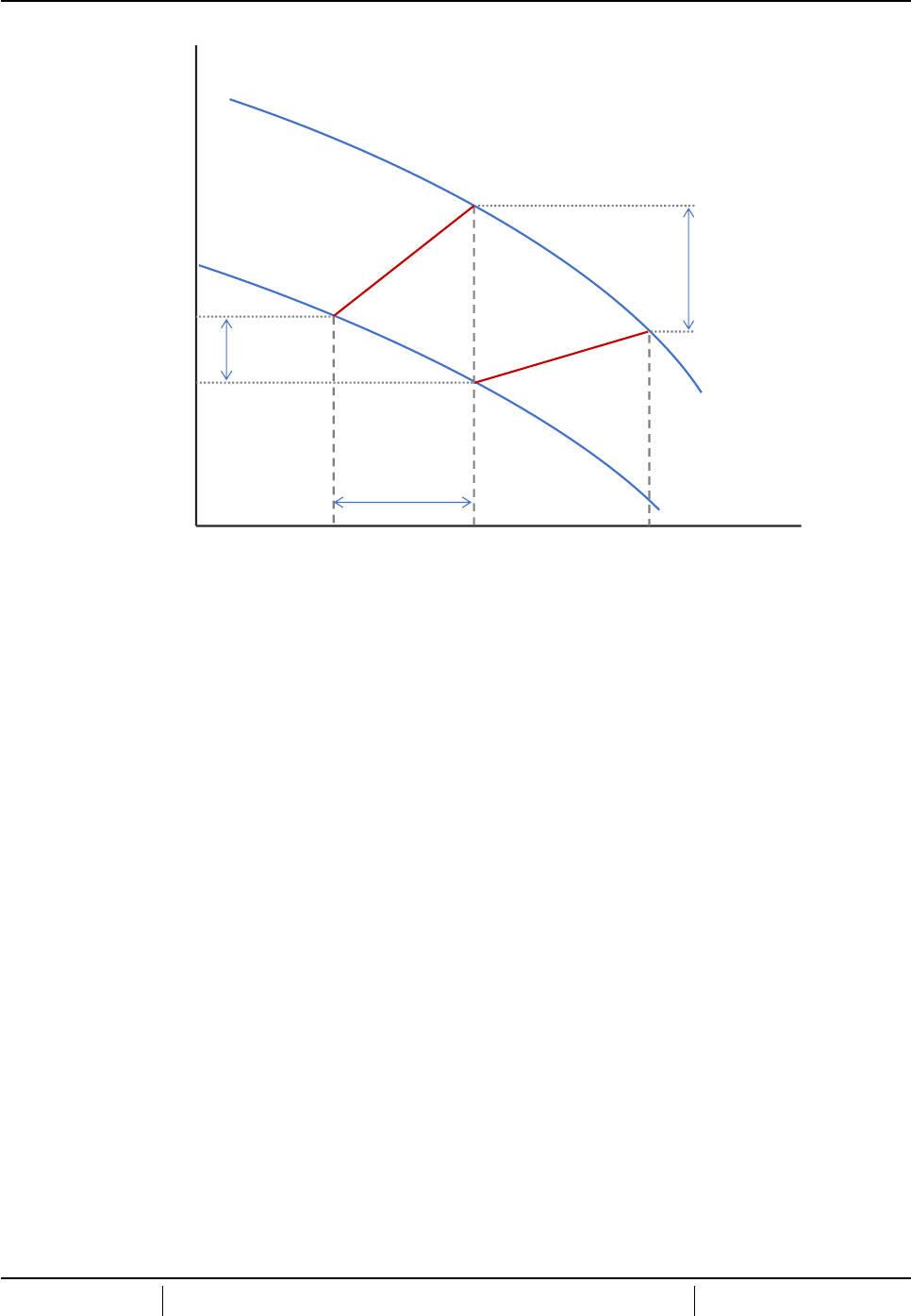

When similarity scores are computed over a collection of images from demographic A (say elderly Asian men) they may be higher than from demographic B (say young Asian women). We adopt terminology from a Department of Homeland Security Science and Technology Directorate article [20] and define differential performance as a “difference in the genuine or imposter [score] distributions”. Such differentials are inconsequential unless they prompt a differential outcome. An outcome occurs when a score is compared with an operator-defined threshold. A genuine score below threshold yields a false negative outcome, and an imposter score at or above threshold, a false positive outcome. The aim of this report is to quantify differential outcomes between demographics. The term demographic differential is inherited from an ISO technical report [6] now under development.

FIXED THRESHOLD OPERATION

A crucial point in reasoning about differentials is that the vast majority of biometric systems are configured with a fixed threshold against which all comparisons are made (i.e., the threshold is not tailored to cameras, environmental conditions or, particularly, demographics). Most academic studies ignore this point (even studies of demographics, e.g., [13]) by reporting false negative rates at fixed false positive rates rather than at fixed thresholds, thereby hiding excursions in false positive rates and misstating false negative rates. This report documents demographic differentials around typical operating thresholds.
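To make fixed-threshold reporting concrete, the sketch below computes FNMR and FMR for each demographic group at one shared threshold, rather than choosing a per-group threshold that fixes FMR. It assumes each comparison arrives already tagged with a single group label (for imposter comparisons, this presumes both subjects share that label); the group names and scores are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

Comparison = Tuple[str, bool, float]  # (group label, is_mated, score)


def rates_by_group(comparisons: Iterable[Comparison],
                   threshold: float) -> Dict[str, Dict[str, float]]:
    """FNMR and FMR per group, all evaluated at the same fixed threshold."""
    counts = defaultdict(lambda: {"mated": 0, "fn": 0, "nonmated": 0, "fp": 0})
    for group, is_mated, score in comparisons:
        c = counts[group]
        if is_mated:
            c["mated"] += 1
            c["fn"] += int(score < threshold)    # genuine score below threshold
        else:
            c["nonmated"] += 1
            c["fp"] += int(score >= threshold)   # imposter score at or above threshold
    nan = float("nan")  # reported when a group has no comparisons of that kind
    return {
        group: {
            "FNMR": c["fn"] / c["mated"] if c["mated"] else nan,
            "FMR": c["fp"] / c["nonmated"] if c["nonmated"] else nan,
        }
        for group, c in counts.items()
    }


# Hypothetical comparisons for two groups, for illustration only.
data = [
    ("G1", True, 0.90), ("G1", True, 0.60), ("G1", False, 0.20), ("G1", False, 0.10),
    ("G2", True, 0.80), ("G2", True, 0.85), ("G2", False, 0.75), ("G2", False, 0.30),
]
```

At a shared threshold of 0.7, G1 shows FNMR = 0.5 and FMR = 0, while G2 shows FNMR = 0 and FMR = 0.5. A summary quoting only false negative rates would report G2 as error-free.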

We report false positive and false negative rates separately because the consequences of each type of error are of importance to different communities. For example, in one-to-one access control, false negatives inconvenience legitimate users; false positives undermine a system owner’s security goals. On the other hand, in a one-to-many deportee detection application, a false negative would present a security problem, and a false positive would flag legitimate visitors. The prior probability of imposters in each case is important. For example, in some access control cases, imposters almost never attempt access and the only germane error rate is the false negative rate.

RESULTS OVERVIEW

We found empirical evidence for the existence of demographic differentials in the majority of contemporary face recognition algorithms that we evaluated. The false positive differentials are much larger than those related to false negatives. False positive rates often vary by one or two orders of magnitude (i.e., 10x, 100x). False negative effects vary by factors usually much less than 3. The false positive differentials exist broadly, across many, but not all, algorithms. The false negatives tend to be more algorithm-specific. Research toward mitigation of differentials is discussed in sections 8 and 9.
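One simple way to express a differential such as “10x” or “100x” is the ratio of the largest to the smallest group error rate, together with its base-10 logarithm in orders of magnitude. The sketch below assumes that summary; it is not necessarily the statistic used in the report’s figures, and the function name and rates are hypothetical.

```python
import math
from typing import Dict, Tuple


def differential(rates: Dict[str, float]) -> Tuple[float, float]:
    """Return (max/min ratio, orders of magnitude) for per-group error rates."""
    if not rates:
        raise ValueError("need at least one group")
    lowest = min(rates.values())
    highest = max(rates.values())
    if lowest <= 0.0:
        # A group with no observed errors makes the ratio undefined.
        raise ValueError("ratio undefined when a group has a zero error rate")
    ratio = highest / lowest
    return ratio, math.log10(ratio)


# Hypothetical per-group false match rates at one fixed threshold.
fmr_by_group = {"group_a": 1e-4, "group_b": 1e-3, "group_c": 1e-2}
```

For these rates the ratio is about 100, or two orders of magnitude.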

The accuracy of algorithms used in this report has been documented in recent FRVT evaluation reports [16, 17]. These show a wide range in accuracy across algorithm developers, with the most accurate algorithms producing many fewer errors than lower-performing variants. More accurate algorithms produce fewer errors, and can therefore be expected to have smaller demographic differentials.


FALSE NEGATIVES

With regard to false negative demographic differentials we make the observations below. Note that in real-time cooperative applications, false negatives can often be remedied by making second attempts.

False negative error rates vary strongly by algorithm, from below 0.5% to above 10%. For the more accurate algorithms, false negative rates are usually low with average demographic differentials being, necessarily, smaller still. This is an important result: use of inaccurate algorithms will increase the magnitude of false negative differentials. See Figure 22 and Annex 12.

In domestic mugshots, false negatives are higher in Asian and American Indian individuals, with error rates above those in white and black faces. The lowest false negative rates occur in black faces. This result might not be related to race; it could arise from differences in the time elapsed between photographs, because ageing is highly influential on face recognition false negatives. We will report on that analysis going forward. See Figure 17.

False negative error rates are often higher in women and in younger individuals, particularly in the mugshot images. There are many exceptions to this, so universal statements pertaining to algorithms’ false negative rates across sex and age are not supported.

When comparing high-quality application photos, error rates are very low and measurement of false negative differentials across demographics is difficult. This implies that better image quality reduces false negative rates and differentials. See Figure 22.

When comparing high-quality application images with lower-quality border crossing images, false negative rates are higher than when comparing the application photos. False negative rates are often higher in recognition of women, but the differentials are smaller and not consistent. See Figure 21.

In the border crossing images, false negatives are generally higher in individuals born in Africa and the Caribbean, the effect being stronger in older individuals. See Figure 18.

FALSE POSITIVES

Verification Algorithms: With regard to false positive demographic differentials we make the following observations.

We found false positives to be between 2 and 5 times higher in women than men, the multiple varying with algorithm, country of origin and age. This increase is present for most algorithms and datasets. See Figure 6.

With respect to race, false positive rates are highest in West and East African and East Asian people (but with exceptions noted next). False positive rates are also elevated but slightly less so in South Asian and Central American people. The lowest false positive rates generally occur with East European individuals. See Figure 5.

A number of algorithms developed in China give low false positive rates on East Asian faces, and sometimes these are lower than those with Caucasian faces. The observation that the location of the developer matters, as a proxy for the race demographics of the data used in training, was noted in 2011 [33], and is potentially important to the reduction of demographic differentials due to race and national origin.


We found elevated false positives in the elderly and in children; the effects were larger in the oldest adults and youngest children, and smallest in middle-aged adults. The effects are consistent across country-of-birth, datasets and algorithms but vary in magnitude. See Figure 14 and Figure 15.

With mugshot images, the highest false positives are in American Indians, with elevated rates in African American and Asian populations; the relative ordering depends on sex and varies with algorithm. See Figure 12 and Figure 13.

Identification Algorithms: The presence of an enrollment database affords one-to-many algorithms a resource for mitigation of demographic effects that purely one-to-one verification systems do not have. We note that demographic differentials present in one-to-one verification algorithms are usually, but not always, present in one-to-many search algorithms. See Section 7.

One important exception is that some developers supplied identification algorithms for which false positive differentials are undetectable. Among those is Idemia, who publicly described how this was achieved [15]. A further algorithm, NEC-3, is, on many measures, the most accurate we have evaluated. Other developers producing algorithms with stable false positive rates are Aware, Toshiba, Tevian and Real Networks. These algorithms also give false positive identification rates that are approximately independent of the size of the enrollment database. See Figure 27.
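For context on the last observation, a naive textbook model assumes that a nonmated probe’s comparisons against the N enrolled templates are independent, each producing a false match with probability FMR. Under that assumption an exhaustive thresholded search gives FPIR(N) = 1 - (1 - FMR)^N, which grows roughly as N × FMR while that product is small. The sketch below evaluates this approximation; it is not a model fitted in this report, the function name is hypothetical, and algorithms whose FPIR is approximately independent of N, as reported above, evidently do not follow it.

```python
def fpir_independent(fmr: float, n: int) -> float:
    """Naive FPIR for an exhaustive thresholded search of n enrollments.

    Assumes each nonmated comparison false-matches independently with
    probability fmr (a simplifying assumption, not the report's model).
    """
    if not 0.0 <= fmr <= 1.0:
        raise ValueError("fmr must lie in [0, 1]")
    if n < 0:
        raise ValueError("n must be non-negative")
    return 1.0 - (1.0 - fmr) ** n


if __name__ == "__main__":
    # With FMR = 1e-6 this model gives FPIR near 0.001 at N = 1,000
    # and near 0.63 at N = 1,000,000.
    for n in (1_000, 100_000, 1_000_000):
        print(n, round(fpir_independent(1e-6, n), 4))
```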

PRIOR WORK

This report is the first to describe demographic differentials for identification algorithms. There are, however, recent prior tests of verification algorithms whose results comport with ours regarding demographic differentials between races.

Using four verification algorithms applied to domestic mugshots, the Florida Institute of Technology and its collaborators showed [23] simultaneously elevated false positives and reduced false negatives in African Americans vs. Caucasians.

Cavazos et al. [8] applied four verification algorithms to GBU challenge images [32] to show order-of-magnitude higher false positives in Asians vs. Caucasians. The paper articulates five lessons related to measurement of demographic effects.

In addition, a recent Department of Homeland Security (DHS) Science and Technology / SAIC study [20] using a leading commercial algorithm showed that pairing of imposters by age, sex and race gives false positive rates that are two orders of magnitude higher than by pairing individuals randomly.
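The difference between the two pairing schemes in the DHS/SAIC comparison [20] lies in how nonmated pairs are formed. The sketch below, with hypothetical subject IDs and labels, enumerates all imposter pairs, optionally restricted to pairs sharing the same (sex, age group, race) label. It shows only the construction of the pairs, not that study’s procedure or results; random pairing would correspond to sampling from the unrestricted set.

```python
from itertools import combinations
from typing import Dict, List, Tuple

Demographics = Tuple[str, str, str]  # (sex, age group, race); hypothetical labels


def imposter_pairs(subjects: Dict[str, Demographics],
                   matched: bool) -> List[Tuple[str, str]]:
    """Enumerate nonmated (different-subject) pairs.

    If matched is True, keep only pairs whose demographic labels are identical.
    """
    pairs = []
    for a, b in combinations(sorted(subjects), 2):
        if not matched or subjects[a] == subjects[b]:
            pairs.append((a, b))
    return pairs


# Hypothetical subjects, for illustration only.
subjects = {
    "s1": ("F", "20-30", "X"),
    "s2": ("F", "20-30", "X"),
    "s3": ("M", "50-60", "Y"),
    "s4": ("M", "50-60", "Y"),
    "s5": ("F", "50-60", "Y"),
}
```

Of the 10 possible imposter pairs here, only two are demographically matched; a random sample such as `random.sample(imposter_pairs(subjects, False), k)` would mostly draw unmatched pairs, which is why the two schemes can yield very different false positive rates.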

On an approximately monthly schedule starting in 2017, NIST has reported [16] on demographic effects in one-to-one verification algorithms submitted to the FRVT process. Those tests employed smaller sets of mugshot and visa photographs than are used here.


WHAT WE DID NOT DO

This report establishes context, gives results and impacts, and discusses additional research that can support mitigation of observed deficiencies. It does not address the following:

Training of algorithms: We did not train algorithms. The prototype algorithms submitted to NIST are fixed and were not refined or adapted. This reflects the usual operational situation in which face recognition systems are not adapted on customers’ local data. We did not attempt, or invite developers to attempt, mitigation of demographic differentials by retraining the algorithms on image sets maintained at NIST. We simply ran the tests using algorithms as submitted.

Analyze cause and effect: We did not make efforts to explain the technical reasons for the

observed results, nor to build an inferential model of them. Specifically, we have not tried

to relate recognition errors to skin tone or any other phenotypes evident in faces in our

image sets. We think it likely that modeling will need richer sets of covariates than are

available. In particular, efforts to estimate skin tone and other phenotypes will involve an

algorithm that itself may exhibit demographic differentials.

We have not yet pursued regression approaches due to the volume of data, the number of algorithms tested, and the need to model each recognition algorithm separately, as they are built and trained independently. We note, however, the utility of mixed-effects models [3, 4, 9], previously developed for explaining recognition failure, due to their ability to handle imbalanced data. Such approaches can use subject-specific variables (age, sex, race, etc.) and image-specific variables (contrast, brightness, blur, uniformity, etc.). Models are often useful, even though it is inevitable that germane quantities will be unavailable to the analysis.

Consider the effect of cameras: The possible role of the camera, and the subject-camera interaction, has been detailed recently [9]. This is particularly important when standards-compliant photography is not possible, or not intended, for example in high-throughput access control. Without access to human-camera interaction data, we do not report on quantities like satisfaction, difficulty of use, and failure to enroll. Along these lines, it has been suggested [41] that NIST's tests using standards-compliant images “don’t translate to everyday scenarios”.

In fact, we note demographic effects even in high-quality images, notably elevated false

positives. Additionally, we quantify false negatives on a border crossing dataset which is

collected at a different point in the trade space between quality and speed than are our

other three mostly high-quality portrait datasets.

Finally, some governmental organizations have dedicated resources to advancing standards so that the “real-world” images in their applications are high-quality portraits. For example, the main criminal justice application benefits from the FBI and others having been proactive in the 1990s in establishing, and then promulgating, portrait capture standards.

Use wild images: We did not use image data from the Internet nor from video surveil-

lance. This report does not capture demographic differentials that may occur in such pho-

tographs.


RESEARCH RECOMMENDATIONS

We now discuss research germane to the quantification, handling and mitigation of demo-

graphic differentials.

Testing: Since 2017 NIST has provided demographic differential data to developers of one-to-one verification algorithms. Our goal has been to encourage developers to remediate the effects. While that may have happened in some cases, a prime incentive for a developer participating in NIST evaluations is to reduce false negative rates globally. Going forward, we plan to report accuracy in a way that pushes developers to produce approximately equal false positive rates across all demographics.

Mitigation of false positive differentials: With adequate research and development, the

following may prove effective at mitigating demographic differentials with respect to false

positives: Threshold elevation, refined training, more diverse training data, discovery of fea-

tures with greater discriminative power - particularly techniques capable of distinguishing

between twins - and use of face and iris as a combined modality. These are discussed in

section 9. We also discuss, and discount, the idea of user-specific thresholds.
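One way to read "threshold elevation" is to raise a single global threshold until the worst-affected demographic group meets the target false match rate. This interpretation is ours and is not the method specified in section 9. The Python sketch below assumes imposter scores are already grouped by a hypothetical group label. It keeps one threshold for everyone, so it does not introduce user-specific thresholds.

```python
import math


def fmr(scores, threshold):
    """Proportion of imposter scores at or above the threshold."""
    return sum(s >= threshold for s in scores) / len(scores)


def elevated_threshold(imposter_scores_by_group, target_fmr):
    """Smallest threshold, among observed scores, at which every group meets target_fmr.

    imposter_scores_by_group maps a group label (hypothetical) to that group's
    imposter similarity scores. FMR is non-increasing in the threshold, so the
    first candidate that satisfies all groups is the smallest such candidate.
    """
    candidates = sorted({s for scores in imposter_scores_by_group.values() for s in scores})
    for t in candidates:
        if all(fmr(scores, t) <= target_fmr for scores in imposter_scores_by_group.values()):
            return t
    # Even the largest observed score is matched by some imposter; go just above it.
    return math.nextafter(candidates[-1], math.inf)


if __name__ == "__main__":
    group_a = [0.1, 0.2, 0.3, 0.4, 0.5]
    group_b = [0.3, 0.5, 0.6, 0.7, 0.8]
    pooled_t = elevated_threshold({"all": group_a + group_b}, 0.2)
    print(pooled_t, fmr(group_b, pooled_t))  # 0.7 0.4: group B exceeds the target
    print(elevated_threshold({"A": group_a, "B": group_b}, 0.2))  # 0.8
```

In this toy data, a threshold calibrated on pooled scores meets the target overall but gives group B twice the target FMR. Elevating the threshold to 0.8 brings both groups within the target. The cost is a higher false negative rate for everyone.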

Mitigation of false negative differentials: False negative error rates, and demographic

differentials therein, are reduced in standards-compliant images. This motivates the sug-

gestions of further research into image quality analysis, face-aware cameras and improved

standards-compliance discussed in section 8.

Policy research: The degree to which demographic differentials could be tolerated has never been formally specified in any biometric application. Any standard directed toward limiting allowable differentials in the automated processing of digitized biological characteristics might weigh the actual consequences of differentials, which are strongly application-dependent.

REPORTING OF DEMOGRAPHIC EFFECTS

Reporting of demographic effects has been incomplete, in both academic papers and in media coverage. In particular, accuracy is often discussed without specifying whether false positives or false negatives are meant. We therefore suggest that reports covering demographic differentials should describe:

The purpose of the system - initial enrollment of individuals into a system, identity verification or identification;

The stage at which the differential occurred - at the camera, during quality assessment, in

the detection and feature extraction phase, or during recognition;

The relevant metric: false positive or false negative occurrences during recognition, failures

to enroll, failed detections by the camera, for example;

Any differentials in duration of processes or difficulty in using the system;

Any known information on recognition threshold value, whether the threshold is fixed,

and what the target false positive rate is;

Which demographic group has the elevated failure rates - for example by age, sex, race,

height, or in some intersection thereof; and

Consequences of any error, if known, and procedures for error remediation.
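Two items in the list above, the stage at which a differential occurred and which group has elevated failure rates, need failures broken down along both axes at once. The Python sketch below is a minimal illustration under stated assumptions. It assumes a hypothetical transaction log in which each record carries a group label and the first stage, if any, that rejected it. The stage names are our own shorthand for the pipeline of Figure 1.

```python
from collections import Counter, defaultdict

# Hypothetical stage labels, loosely following the pipeline of Figure 1.
STAGES = ("capture", "attack_detection", "quality", "detection", "recognition")


def failure_rates_by_stage(transactions):
    """Failure rate per demographic group at each pipeline stage.

    transactions: iterable of (group, failed_stage) pairs, where failed_stage is
    the first stage that rejected the transaction, or None if it succeeded.
    Rates are relative to all transactions for that group, so an early-stage
    rejection is never also counted at a later stage.
    """
    totals = Counter()
    failures = defaultdict(Counter)
    for group, failed_stage in transactions:
        totals[group] += 1
        if failed_stage is None:
            continue
        if failed_stage not in STAGES:
            raise ValueError(f"unknown stage: {failed_stage!r}")
        failures[group][failed_stage] += 1
    return {
        group: {stage: failures[group][stage] / n for stage in STAGES}
        for group, n in totals.items()
    }
```

Because a rejected transaction never reaches later stages, a low recognition-stage error rate for a group can hide a high rejection rate upstream. Reporting every stage side by side makes that visible.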


ACKNOWLEDGMENTS

The authors are grateful to Yevgeniy Sirotin and John Howard of SAIC at the Maryland Test

Facility, Arun Vemury of DHS S&T, Michael King of Florida Institute of Technology, and John

Campbell of Bion Biometrics for detailed discussions of their work in this area.

The authors are grateful to staff in the NIST Biometrics Research Laboratory for infrastructure

supporting rapid evaluation of algorithms.

DISCLAIMER Specific hardware and software products identified in this report were used in order to per-

form the evaluations described in this document. In no case does identification of any com-

mercial product, trade name, or vendor, imply recommendation or endorsement by the Na-

tional Institute of Standards and Technology, nor does it imply that the products and equip-

ment identified are necessarily the best available for the purpose. Developers participating

in FRVT grant NIST permission to publish evaluation results.

ANNEXES

We supplement this report with more than 1200 pages of charts contained in 17 Annexes

which include exhaustive reporting of results for each algorithm. These are intended to show

the breadth of the effects and to inform the algorithms’ developers. We do not take averages

over algorithms, for example the average increase of false match rate in women, because

averages of samples from different distributions are seldom meaningful (by analogy, taking

the average of temperatures in Montreal and Miami). Applications typically employ just one

algorithm, so averages and indeed any statements purporting to summarize the entirety of

face recognition will not always be correct.

The annexes to this report are listed in Table 1. The first four detail the datasets used in this report. The remaining annexes contain more than 1200 pages of automatically generated graphs, usually one for each algorithm evaluated.

# CATEGORY DATASET CONTENT

Annex 1 Datasets Mugshot Description and examples of images and metadata: Mugshots

Annex 2 Datasets Application Description and examples of images and metadata: Application portraits

Annex 3 Datasets Visa Description and examples of images and metadata: Visa portraits

Annex 4 Datasets Border crossing Description and examples of images and metadata: Border crossing photos

Annex 5 Results 1:1 Application False match rates for demographically matched impostors

Annex 6 Results 1:1 Mugshot Cross-race and sex false match rates in United States mugshot images

Annex 7 Results 1:1 Application Cross-race and sex false match rates in worldwide application images

Annex 8 Results 1:1 Application False match rates with matched demographics using application images

Annex 9 Results 1:1 Application Cross-age false match rates with application photos

Annex 10 Results 1:1 Visa Cross age false match rates with visa photos

Annex 11 Results 1:1 Mugshot Cross age and country with application photos

Annex 12 Results 1:1 Mugshot Error tradeoff characteristics with United States mugshots

Annex 13 Results 1:1 Mugshot False negative rates in United States mugshot images by sex and race

Annex 14 Results 1:1 Mugshot False negative rates by country for global application and border crossing photos

Annex 15 Results 1:1 Mugshot Genuine and impostor score distributions for United States mugshots

Annex 16 Results 1:N Mugshot Identification error characteristics by race and sex

Annex 17 Results 1:N Mugshot Candidate list score magnitudes by sex and race

Table 1: Annexes and their content.


TERMS AND DEFINITIONS

The following table defines common terms appearing in this document. A more complete, consistent biomet-

rics vocabulary is available as ISO/IEC 2382 Part 37.

DATA TYPES

Feature vector A vector of real numbers that encodes the identity of a person

Sample One or more images of the face of a person

Similarity score Degree of similarity of two faces in two samples, as rendered by a recognition

algorithm

Template Data produced by a face recognition algorithm that includes a feature vector

Threshold Any real number, against which similarity scores are compared to produce a

verification decision

ALGORITHM COMPONENTS

Face detector Component that finds faces in an image

Comparator Component that compares two templates and produces a similarity score

Searcher Component that searches a database of templates to produce a list of candidates

Template generator Component of a face recognition algorithm that converts a sample into a template; this component implicitly embeds a face detector

ONE-TO-ONE VERIFICATION

Imposter comparison Comparison of samples from different persons

Genuine comparison Comparison of samples from the same person

False match Incorrect association of two samples from different persons, declared because

similarity score is at or above a threshold

False match rate Proportion of imposter comparisons producing false matches

False non-match Failure to associate two samples from one person, declared because similarity

score is below a threshold

False non-match rate Proportion of genuine comparisons producing false non-matches

Verification The process of comparing two samples to determine if they belong to the same

person or not

ONE-TO-MANY IDENTIFICATION

Gallery A set of templates, each tagged with an identity label

Consolidated gallery A gallery for which all samples of a person are enrolled under one identifier, whence N = N_G

Unconsolidated gallery A gallery for which samples of a person are enrolled under different identifiers, when N < N_G

Identity label Some index or pointer to an identifier for an individual

Identification The process of searching a probe into a gallery

Identification decision The assignment either of an identity label or a declaration that a person is not

in the gallery

SYMBOLS

FMR Verification false match rate (measured over comparison of samples)

FNMR Verification false non-match rate (measured over comparison of samples)

FPIR Identification false positive identification rate (measured over non-mated searches)

FNIR Identification false negative identification rate (measured over mated searches)

N The number of subjects whose faces are enrolled into a gallery

N_G The number of samples enrolled into a gallery, N_G ≥ N

N_NM The number of non-mated searches conducted

N_M The number of mated searches conducted
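The identification terms and the FPIR and FNIR symbols can be tied together in a short sketch. It is a simplification under stated assumptions. The gallery is consolidated and held as a list of (identity label, template) pairs, the comparator is supplied by the caller, and the decision uses only the top-ranked candidate. Operational searchers typically return a ranked candidate list, and all names here are hypothetical.

```python
def identify(probe, gallery, compare, threshold):
    """Top-candidate identification decision.

    gallery: list of (identity_label, template) pairs, assumed consolidated.
    compare: caller-supplied comparator returning a similarity score.
    Returns the best-scoring label if its score is at or above the threshold,
    otherwise None, meaning "not in the gallery".
    """
    best_label, best_score = None, float("-inf")
    for label, template in gallery:
        score = compare(probe, template)
        if score > best_score:
            best_label, best_score = label, score
    return best_label if best_score >= threshold else None


def fnir(mated_outcomes):
    """Fraction of the N_M mated searches whose true identity was not returned.

    mated_outcomes: list of (true_label, returned_label) pairs.
    """
    misses = sum(returned != true for true, returned in mated_outcomes)
    return misses / len(mated_outcomes)


def fpir(nonmated_outcomes):
    """Fraction of the N_NM non-mated searches that returned any identity."""
    alarms = sum(returned is not None for returned in nonmated_outcomes)
    return alarms / len(nonmated_outcomes)
```

FNIR is normalized by the number of mated searches and FPIR by the number of non-mated searches. This is why the two counts are tracked separately.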


Contents

Acknowledgements

Disclaimer

Terms and definitions

1 Introduction

2 Prior work

3 Performance metrics

4 False positive differentials in verification

5 False negative differentials in verification

6 False negative differentials in identification

7 False positive differentials in identification

8 Research toward mitigation of false negatives

9 Research toward mitigation of false positives


[Figure 1 diagram: a notional pipeline of Image Capture (+/- exposure), Attack Detection, Quality Assessment and Acceptance, Face Detection and Localization, Feature Extraction, Recognition (verification or identification), and Human Review ("Same person or not?"). The errors annotated across the stages are APCER (missed presentation attack), BPCER (false assertion of a presentation attack), false assertion of low quality, no detection, incorrect detection, 1:1 FNMR (false rejection), 1:1 FMR (false accept), 1:N FNIR ("miss rate") and 1:N FPIR ("false alarm"). The consequences shown include inconvenience of recapture, security holes, missed leads, false leads, wasted effort on others, displacement of an actual lead, and false accusation. Source cited in the figure: http://www.telegraph.co.uk/technology/2016/12/07/robot-passport-checker-rejects-asian-mans-photo-having-eyes/]

Figure 1: The figure is intended to show possible stages in a face recognition pipeline at which demographic differentials

could, in principle, arise. Note that none of these stages necessarily includes algorithms that may be labelled artificial

intelligence, though typically the detection and feature extraction modules are AI-based now.

1 Introduction

Over the last two years there has been expanded coverage of face recognition in the popular press. In part this is due to the expanded capability of the algorithms, a larger number of applications, lowered barriers to algorithm development¹, and, not least, reports that the technology is somehow biased. This latter aspect draws on a Georgetown study [14] and two reports by MIT [5, 36]. The Georgetown work noted prior studies [22], articulated sources of bias, described the potential impacts, particularly in a policing context, and discussed policy and regulatory implications. The MIT work did not study face recognition; instead, it looked at how well publicly accessible cloud-based estimation algorithms can determine gender from a single image. The studies have been widely cited as evidence that face recognition is biased.

This stems from a confusion in terminology. Face classification algorithms, of the kind MIT reported on, accept one face image sample and produce an estimate of age, or sex, or some other property of the subject. Face recognition algorithms, on the other hand, operate as differential operators: they compare identity information in feature vectors extracted from two face image samples and produce a measure of similarity between the two, which can be used to answer the question “same person or not?”. Face recognition algorithms, both one-to-one identity verification and one-to-many search algorithms, are built on this differential comparison. The salient point, in the demographic context, is that one or two people are involved in a comparison and, as we will see, the age, sex, race and other demographic properties of both will be material to the recognition outcome.

¹ Gains in face recognition performance stem from well-capitalized AI research in industry and academia leading to the development of convolutional neural networks, and open-source implementations thereof (Caffe, TensorFlow, etc.). For face recognition, the availability of large numbers of identity-labeled images (from the web, and in the form of web-curated datasets [VGG2, IJB-C]) and the availability of ever more powerful GPUs have supported training those networks.
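The differential comparison can be sketched in a few lines. Commercial comparators are proprietary. Cosine similarity between fixed-length feature vectors is assumed here only because it is a common choice in embedding-based systems, and the function names are hypothetical. The sketch makes a structural point: the score is a function of both inputs, so properties of both subjects can influence it.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (an assumed comparator)."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have equal length")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0:
        raise ValueError("feature vectors must be non-zero")
    return dot / norm


def same_person(features_a, features_b, threshold):
    """Verification decision: True if the similarity is at or above the threshold."""
    return cosine_similarity(features_a, features_b) >= threshold
```

A face classification algorithm, by contrast, would take a single feature vector and return a label. It involves no second sample and no threshold on a pairwise score.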

The MIT reports nevertheless serve as a cautionary tale in two respects. First, that demographic group member-

ship can have a sizeable effect on algorithms that process face photographs; second, that algorithm capability

varies considerably by developer.

1.1 Potential sources of bias in face recognition systems

Lost in the discussion of bias is specificity on exactly what component of the process is at fault. Accord-

ingly, we introduce Figure 1 to show that a face recognition system is composed of several parts. The figure

shows a notional face recognition pipeline consisting of a capture subsystem, primarily a camera, followed

by a presentation attack detection (PAD) module intended to detect impersonation attempts, a quality accep-

tance (QA) step aimed at checking portrait standard compliance, then the recognition components of feature

extraction and 1:1 or 1:N comparison, the output of which may prompt human involvement. The order of the

components may be different in some systems, for example the QA component may be coupled to the capture

process and would precede PAD. Some components may not exist in some systems, particularly the QA and

PAD functions may not be necessary.

The Figure shows performance metrics, any of which could notionally have a demographic differential. Errors

at one stage will generally have downstream consequences. In a system where subjects make cooperative

presentation to the camera, a person could be rejected in the early stages before recognition itself. For example,

a camera equipped with optics that have too narrow a field of view could produce an image of a tall individual in which the top part of the head was cropped. This could cause rejection at almost any stage, and a system owner would need to determine the origin of errors.

1.2 The role of image quality

Recent research [9] has shown that cameras can have an effect on a generic downstream recognition engine. A poor image can undermine detection or recognition, and it is possible that certain demographics yield photographs ill-suited to face recognition, e.g. young children [28] or very tall individuals. As pointed out above, there is potential for demographic differentials to appear at the capture stage, that is, when only a single image is being collected, before any comparison with other images. Demographic differentials that occur during collection could arise from (at least) inadequacies of the camera, from the environment or “stage”, and from client-side detection or quality assessment algorithms. Note that manifestly poor (and unrecognizable) images can be collected from misconfigured cameras, without any algorithmic or AI culpability. Indeed, after publication of the MIT studies [5, 36] on bias in gender-estimation algorithms, suspicion fell upon the presence of poor photographs, due to under-exposure of dark-skinned individuals in that dataset. An IBM gender estimation algorithm had been faulted in the MIT study; IBM has been active in addressing AI bias, both before and in response to that study. Relevant here is that it produced a better algorithm² and examined whether skin tone itself drove gender classification accuracy [30, 31]; in short, “skin type by itself has a minimal effect on the classification decision”.

False negatives occur in biometric systems when samples from one individual yield a comparison score below a threshold. This will occur when the features extracted from two input photographs are insufficiently similar. Recall that face recognition is implemented as a differential operator: two samples are analyzed and compared. So a false negative occurs when two images of the same face appear different to the algorithm.

It is very common to attribute false negatives to factors such as pose, illumination and expression, so much so that dedicated databases have been built to support development of algorithms immune to such factors³.

Invariance to such “nuisance” factors has been the focus of the bulk of face recognition research for more than two decades. Indeed, over the last five years there have been great advances in this respect due to the adoption of deep convolutional neural networks, which demonstrate remarkable tolerance to very sub-standard photographs, i.e. those that deviate from formal portrait standards, most prominently ISO/IEC 39794-5 and its law-enforcement equivalent ANSI/NIST-ITL 1-2017.

However, here we need to distinguish between factors that are expected to affect one photo in a mated pair -

due to poor photography (e.g. mis-focus), poor illumination (e.g. too dark), and poor presentation (e.g. head

down) - and those that would affect both photographs over time, potentially including properties related to

demographics.

1.3 Photographic Standards

In the late 1990s the FBI asked NIST to establish photographic best practices for mugshot collection⁴. This was done primarily to guide state and local police departments in the capture of photographs that would support forensic (i.e. human) review. It occurred more than a decade before the FBI deployed automated face recognition. That standardization work was conducted in anticipation of digital cameras⁵ becoming available to replace film cameras that had been used for almost a century. The standardization work included consideration of cameras, lights and geometry⁶. There was explicit consideration of the need to capture images of both dark- and light-skinned individuals, it being understood that it is relatively easy to produce photographs in which large areas of dark or bright pixels render detection of anatomical features impossible.

² See Mitigating Bias in AI Models.

³ The famous PIE databases, for example.

⁴ Early documents, such as Best Practice Recommendation for the Capture of Mugshots, 1999, seeded later formal standardization of ISO/IEC 19794-5.

⁵ See NIST Interagency Report 6322, 1999.

⁶ See this overview.

Face recognition proceeds as a differential operation on features extracted from two photographs. Accuracy can be undermined by poor photography, illumination or presentation, and by differences in those between the two photographs, i.e. any change in the digital facial appearance. Of course, an egregiously underexposed photograph will have insufficient information content, but two photographs taken with even moderately poor exposure can match, and leading contemporary algorithms are highly tolerant of quality degradations.

1.4 Age and ageing

Ageing will change appearance over decades and will ultimately undermine automated face recognition⁷. In the current study, we don’t consider ageing to be a demographic factor because it is a slow, more-or-less graceful process that happens to all of us. However, there is at least one demographic group that ages more quickly than others: children. They are disadvantaged in many automated border control systems, either by being excluded by policy or by encountering higher false negatives. Age itself is a demographic factor, as accuracy in the elderly and the young differs for face recognition (usually) and also for fingerprint authentication. This applies even without significant time lapse between two photographs.

Clearly, injury or disease can change appearance on very short timescales, so such factors should be excluded, when possible, from studies dedicated to detection of broad demographic effects. Development of equipment and algorithms, and studies thereof, that are dedicated to the inclusive use of biometrics are of course valuable, for example recognition of photosensitive subjects wearing sunglasses, or of finger amputees presenting fingerprints.

⁷ See recent results for verification algorithms in the FRVT reports, and for identification algorithms in NIST Interagency Report 8271 [17]. For a formal longitudinal analysis of ageing, using mixed-effects models, see Best-Rowden [3].


# | SOURCE | IMAGE | NUMBER OF SUBJECTS | NUMBER OF IMAGES | DISCUSSION

1 | Cavazos et al. [8] at UT Dallas | Notre Dame GBU [32] portraits | 389 | <1085 | The study showed order-of-magnitude elevations in false positive rates in university volunteer Asian vs. Caucasian faces. The study reported FMR(T). As the study showed neither FNMR(T) nor linked error tradeoff characteristics, the false negative differential is not apparent. It discusses the effect of “yoking”, i.e. the pairing of imposters by sex and race. It deprecates area-under-the-curve (AUC). The study used two related algorithms from the University of Maryland, one open-source algorithm [38], and one older, inaccurate pre-DCNN algorithm.

2 | Krishnapriya et al. [23] at Florida Inst. Tech | Operational mugshots: Morph db [37] | 10 350 African Am. + 2 769 Caucasians | 42 620 African Am. + 10 611 Caucasians | The study reported order-of-magnitude elevated false positives in African Americans vs. Caucasians, lower false negative rates in African Americans, and reduced differentials in higher quality images [23, 24]. That study used three open-source algorithms and one commercial algorithm. Two of the open-source algorithms are quite inaccurate and not representative of commercial deployment. Importantly, the study also noted the inadequacies of error tradeoff characteristics for documenting fixed-threshold demographic differentials.

3 | Howard et al. [20] at SAIC/MdTF with DHS S&T | Lab-collected, adult volunteers [9] | 363 | - | The study established useful definitions for “differential performance” and “differential outcome”, and for broad and narrow heterogeneity of imposter distributions. It showed order-of-magnitude variation in false positive rates with age, sex and race, establishing an information gain approach to formally ordering their effect. The study employed images from 11 capture devices, and applied one leading commercial verification algorithm.

Table 2: Prior studies.

2 Prior work

All prior work relates to one-to-one verification algorithms. This report, in contrast, includes results for many

recent, mostly commercial, algorithms implementing both verification and identification.

Except as detailed below, this report is the first to properly report and distinguish between false positive and

false negative effects, something that is often missing in other reports.

The broad effects given in this report concerning age and sex have been known as far back as 2003 [35]. Since 2017, our ongoing FRVT report [16] has reported large false positive differentials across sex, age and race.

Tables 2 and 3 summarize recent work in demographic effects in automated face recognition.


# | SOURCE | IMAGE | NUMBER OF SUBJECTS | NUMBER OF IMAGES | DISCUSSION

4 | Cook et al. [9] at SAIC/MdTF with DHS S&T | Lab-collected, adult volunteers | 525 | | The study deployed mixed-effects regression models to examine dependence of genuine similarity scores on sex, age, height, eyewear, skin reflectance and capture device. The report displayed markedly different images of the same people from different capture devices, showing potential for the camera to induce demographic differential performance. The study found lower similarity scores in those identifying as Black or African American, comporting with [22] but contrary to the best ageing study [3]. The study also showed that comparisons of samples collected on the same day exhibit different demographic differentials than those collected up to four years apart, in particular that women give lower genuine scores than men with time separation. Same-day biometrics are useful for short-term recognition applications like transit through an airport.

5 | El Khiyari et al. [13] | Operational mugshots: Morph db [37] | 724 adult, balanced on race + sex | 2896 = 1448 each African Am. + Caucasians, balanced on sex | The paper used a subset of the MORPH database with two algorithms ([38], modified, and one COTS) to show better verification error rates in men, in the elderly, and in whites. The study should be discounted for two reasons. First, the algorithms give high error rates at very modest false match rates: the best FNMR = 0.06 at FMR = 0.01. Second, the paper reports FNMR at fixed FMR, not at fixed thresholds, thereby burying FMR differentials. Moreover, the paper does not disclose how imposters were paired, e.g. randomly or, say, with same age, race, and sex.

Table 3: Prior studies (continued).


3 Performance metrics

Both verification and identification systems generally commit two kinds of errors: the so-called Type I error, where an individual is incorrectly associated with another, and the Type II error, where an individual is incorrectly not associated with themselves.

The ISO/IEC 19795-1 performance testing and reporting standard requires different metrics to be reported for identification and verification implementations. Accordingly, the following subsections define the formal metrics used throughout this document.

3.1 Verification metrics

Verification accuracy is estimated by forming two sets of scores: genuine scores are produced from mated pairs; imposter scores are produced from non-mated pairs. These comparisons should be done in random order so that the algorithm under test cannot infer whether a comparison is mated.

From a vector of N genuine scores, u, the false non-match rate (FNMR) is computed as the proportion below

some threshold, T:

$$\mathrm{FNMR}(T) = 1 - \frac{1}{N}\sum_{i=1}^{N} H(u_i - T) \tag{1}$$

where H(x) is the unit step function, with H(0) taken to be 1.

Similarly, given a vector of M imposter scores, v, the false match rate (FMR) is computed as the proportion

above T:

$$\mathrm{FMR}(T) = \frac{1}{M}\sum_{i=1}^{M} H(v_i - T) \tag{2}$$
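Equations (1) and (2) reduce to counting scores on either side of the threshold. The following is a minimal Python sketch, not NIST's implementation; the function names and the toy score vectors are hypothetical, and the step function follows the text's convention that H(0) = 1, so a score exactly equal to T counts as a match.

```python
from typing import Sequence


def step(x: float) -> int:
    """Unit step function H(x), with H(0) taken to be 1 as in the text."""
    return 1 if x >= 0 else 0


def fnmr(genuine: Sequence[float], t: float) -> float:
    """Eq. (1): one minus the fraction of the N genuine scores u_i at or above t."""
    n = len(genuine)
    return 1.0 - sum(step(u - t) for u in genuine) / n


def fmr(imposter: Sequence[float], t: float) -> float:
    """Eq. (2): fraction of the M imposter scores v_i at or above t."""
    m = len(imposter)
    return sum(step(v - t) for v in imposter) / m


# Hypothetical toy scores, for illustration only.
u = [0.91, 0.85, 0.40, 0.77]  # genuine (mated) scores
v = [0.10, 0.35, 0.52, 0.05]  # imposter (non-mated) scores
print(fnmr(u, 0.5), fmr(v, 0.5))
```

Raising T moves FMR toward 0 and FNMR toward 1, which is the tradeoff the error characteristics below plot.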

The threshold, T, can take on any value. We typically generate a set of thresholds from quantiles of the observed imposter scores, v, as follows. Given a false match rate range of interest, $[\mathrm{FMR}_L, \mathrm{FMR}_U]$, we form a vector of K thresholds corresponding to FMR values evenly spaced on a logarithmic scale. This supports plotting FMR on a logarithmic axis, which is needed because typical operations target false match rates spanning several decades, from $10^{-6}$ to as high as $10^{-2}$.

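$$T_k = Q_v(1 - \mathrm{FMR}_k) \tag{3}$$

where $Q_v$ is the quantile function of the imposter scores, v, and $\mathrm{FMR}_k$ comes from

$$\log_{10} \mathrm{FMR}_k = \log_{10} \mathrm{FMR}_L + \frac{k}{K}\left[\log_{10} \mathrm{FMR}_U - \log_{10} \mathrm{FMR}_L\right] \tag{4}$$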
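Equations (3) and (4) can be sketched in Python as below. This is an illustration under stated assumptions, not the report's code: the report does not say how its empirical quantile interpolates between order statistics, so linear interpolation is assumed here, and k is taken to run from 0 to K inclusive so that both endpoints FMR_L and FMR_U appear. All function names are hypothetical.

```python
import math
from typing import List, Sequence


def quantile(values: Sequence[float], p: float) -> float:
    """Empirical quantile Q_v(p), linearly interpolating between order statistics.

    The interpolation rule is an assumption; the report does not specify one.
    """
    xs = sorted(values)
    h = (len(xs) - 1) * p
    lo = math.floor(h)
    hi = min(lo + 1, len(xs) - 1)
    return xs[lo] + (h - lo) * (xs[hi] - xs[lo])


def log_spaced_fmrs(fmr_l: float, fmr_u: float, k_count: int) -> List[float]:
    """Eq. (4): FMR_k evenly spaced in log10, from FMR_L (k = 0) to FMR_U (k = K)."""
    a, b = math.log10(fmr_l), math.log10(fmr_u)
    return [10 ** (a + (k / k_count) * (b - a)) for k in range(k_count + 1)]


def thresholds(imposter: Sequence[float], fmrs: Sequence[float]) -> List[float]:
    """Eq. (3): T_k = Q_v(1 - FMR_k); a lower target FMR gives a higher threshold."""
    return [quantile(imposter, 1.0 - f) for f in fmrs]


# Hypothetical imposter scores 0.00, 0.01, ..., 1.00, for illustration only.
scores = [i / 100 for i in range(101)]
print(thresholds(scores, log_spaced_fmrs(1e-2, 1e-1, 2)))
```

Note that a target FMR far below 1/M cannot be resolved from M imposter scores; the quantile simply saturates at the largest observed score.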


Error tradeoff characteristics are plots of FNMR(T) vs. FMR(T). These are plotted with $\mathrm{FMR}_U \to 1$ and $\mathrm{FMR}_L$ as low as is sustained by the number of imposter comparisons, M. This lower limit should be somewhat higher than the “rule of three” limit, 3/M, because the comparisons are generally not independent: the same image is used in multiple comparisons.
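A minimal Python sketch of assembling error tradeoff points is shown below. It recounts Eqs. (1) and (2) so that it stands alone, then discards points whose FMR falls below the rule-of-three floor 3/M. The `margin` multiplier is a hypothetical knob standing in for the text's "somewhat higher" (the report gives no specific factor), and the function names are illustrative only.

```python
from typing import List, Sequence, Tuple


def det_points(genuine: Sequence[float], imposter: Sequence[float],
               thresholds: Sequence[float]) -> List[Tuple[float, float]]:
    """(FMR(T), FNMR(T)) for each threshold T; a score equal to T counts as a match."""
    n, m = len(genuine), len(imposter)
    points = []
    for t in thresholds:
        fmr = sum(1 for v in imposter if v >= t) / m
        fnmr = sum(1 for u in genuine if u < t) / n
        points.append((fmr, fnmr))
    return points


def supported_points(points: Sequence[Tuple[float, float]], m: int,
                     margin: float = 1.0) -> List[Tuple[float, float]]:
    """Keep only points with FMR at or above margin * 3/M.

    3/M is the rule-of-three limit for M imposter comparisons. The margin factor is
    hypothetical (not from the report), allowing for dependence between comparisons.
    """
    floor = margin * 3.0 / m
    return [p for p in points if p[0] >= floor]


# Hypothetical toy scores, for illustration only.
g = [0.9, 0.8, 0.3]
imp = [0.1, 0.2, 0.6, 0.4]
pts = det_points(g, imp, [0.05, 0.5, 0.95])
print(supported_points(pts, len(imp)))
```

With realistic volumes, for example M on the order of 10^8 imposter comparisons, the floor 3/M sits near 3 × 10^-8, comfortably below the 10^-6 operating points cited above.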

3.2 Identification metrics

Identification accuracy is estimated from two sets of candidate lists: first, a set of candidate lists obtained from mated searches; second, a set from non-mated searches. These searches should be conducted with mated and non-mated searches in random order, so that the algorithm under test cannot infer whether a search has a mate. Tests of open-set biometric identification algorithms must quantify the frequency of two error conditions:

False positives: Type I errors occur when search data from a person who has never been seen before is

incorrectly associated with one or more enrollees’ data.

Misses: Type II errors arise when a search of an enrolled person’s biometric does not return the correct

identity.

Many practitioners prefer to talk about “hit rates” instead of “miss rates”; the first is simply one minus the other, as detailed below. Sections 3.2.1 and 3.2.2 define metrics for the Type I and Type II performance variables.

Additionally, because recognition algorithms sometimes fail to produce a template from an image, or fail to execute a one-to-many search, the occurrence of such events must be recorded. Further, because algorithms might elect not to produce a template from, for example, a poor quality image, these failure rates must be combined with the recognition error rates to support algorithm comparison. This is addressed in section 3.4.

3.2.1 Quantifying false positives

It is typical for a search to be conducted into an enrolled population of N identities, and for the algorithm

to be configured to return the closest L candidate identities. These candidates are ranked by their score, in

descending order, with all scores required to be greater than or equal to zero. A human analyst might examine

either all L candidates, or just the top R ≤ L identities, or only those with score greater than threshold, T.

From the candidate lists, we compute the false positive identification rate (FPIR) as the proportion of non-mate searches that erroneously return candidates:

$$\mathrm{FPIR}(N, T) = \frac{\text{Num. non-mate searches with one or more candidates returned with score at or above threshold}}{\text{Num. non-mate searches attempted}} \tag{5}$$


Under this definition, FPIR can be computed from the highest non-mate candidate produced in a search; it is not necessary to consider candidates at rank 2 and beyond. An alternative quantity, selectivity, accounts for multiple candidates above threshold (see [17]).
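Because only the top non-mate candidate matters, Eq. (5) reduces to comparing each non-mated search's maximum score with T. The Python sketch below assumes a hypothetical representation in which each non-mated search is a list of candidate scores, empty when nothing was returned; a non-mated search that returns nothing cannot produce a false positive.

```python
from typing import Sequence


def fpir(nonmate_searches: Sequence[Sequence[float]], t: float) -> float:
    """Eq. (5): fraction of non-mated searches returning any candidate with score >= t.

    Each inner sequence holds the candidate scores of one non-mated search (possibly
    empty). Only the highest score is examined; ranks 2 and beyond are ignored.
    """
    false_positives = sum(1 for scores in nonmate_searches
                          if scores and max(scores) >= t)
    return false_positives / len(nonmate_searches)


# Hypothetical candidate scores from four non-mated searches.
searches = [[0.9, 0.2], [0.4], [], [0.7, 0.65]]
print(fpir(searches, 0.6))
```

Selectivity, by contrast, would count every candidate at or above T rather than stopping at the first.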

3.2.2 Quantifying hits and misses

If L candidates are returned in a search, a shorter candidate list can be prepared by taking the top R ≤ L candidates for which the score is at or above some threshold, T ≥ 0. This reduction of the candidate list is done because thresholds may be applied, and only short lists might be reviewed (according to policy or labor availability, for example). It is then useful to state accuracy in terms of R and T, so we define a “miss rate” with the general name false negative identification rate (FNIR), as follows:

$$\mathrm{FNIR}(N, R, T) = \frac{\text{Num. mate searches with enrolled mate found outside top } R \text{ ranks or score below threshold}}{\text{Num. mate searches attempted}} \tag{6}$$

This formulation is simple for evaluation in that it does not distinguish between causes of misses. Thus, a mate that is not reported on a candidate list is treated the same as a miss arising from face finding failure, algorithm intolerance of poor quality, or software crashes. Likewise, if the algorithm fails to produce a candidate list, either because the search failed or because a search template was not made, the result is regarded as a miss, adding to FNIR.
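The rank and threshold conditions of Eq. (6), together with the rule that failed searches count as misses, can be sketched in Python as follows. The representation is hypothetical: each mated search is a pair of a candidate list of (identity, score) tuples and the true mate's identity, with `None` standing in for a failed search or missing template.

```python
from typing import Optional, Sequence, Tuple

Candidate = Tuple[str, float]  # hypothetical (enrolled identity, similarity score)
MatedSearch = Tuple[Optional[Sequence[Candidate]], str]


def is_hit(cands: Optional[Sequence[Candidate]], mate_id: str, r: int, t: float) -> bool:
    """True if the mate appears within the top r ranks with score >= t."""
    if cands is None:  # search failed or no template was made: counted as a miss
        return False
    ranked = sorted(cands, key=lambda c: c[1], reverse=True)  # rank by descending score
    return any(cid == mate_id and score >= t for cid, score in ranked[:r])


def fnir(searches: Sequence[MatedSearch], r: int, t: float) -> float:
    """Eq. (6): fraction of mated searches that are misses, whatever the cause."""
    misses = sum(1 for cands, mate in searches if not is_hit(cands, mate, r, t))
    return misses / len(searches)


# Hypothetical mated searches, for illustration only.
searches = [
    ([("a", 0.9), ("b", 0.5)], "a"),  # mate at rank 1, high score
    ([("c", 0.8), ("d", 0.6)], "d"),  # mate at rank 2
    ([("e", 0.3)], "e"),              # mate at rank 1, low score
    (None, "f"),                      # failed search
]
print(fnir(searches, 1, 0.0), fnir(searches, 2, 0.5))
```

The complement, 1 - fnir(searches, r, t), gives the corresponding hit rate.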

Hit rates and true positive identification rates: While FNIR states the “miss rate” as how often the correct candidate is either not above threshold or not at good rank, many communities prefer to talk of “hit rates”. This is simply the true positive identification rate (TPIR), the complement of FNIR, which gives a positive statement of how often mated searches are successful:

$$\mathrm{TPIR}(N, R, T) = 1 - \mathrm{FNIR}(N, R, T) \tag{7}$$

This report does not present true positive “hit” rates, preferring false negative miss rates for two reasons. First, costs rise linearly with error rates. For example, if we double FNIR in an access control system, then we double user inconvenience and delay. If we express that as a decrease in TPIR from, say, 98.5% to 97%, then we mentally have to invert the scale to see a doubling in costs. Second, and more subtly, readers do not perceive differences between numbers near 100% well, becoming inured to the “high nineties” effect, in which numbers close to 100 are perceived indifferently.

Reliability is a corresponding term, typically identical to TPIR, and often cited in evaluations of automated (fingerprint) identification systems (AFIS).


An important special case is the cumulative match characteristic (CMC), which summarizes the accuracy of mated searches only. It ignores similarity scores by relaxing the threshold requirement, and just reports the fraction of mated searches returning the mate at rank R or better.

$$\mathrm{CMC}(N, R) = 1 - \mathrm{FNIR}(N, R, 0) \tag{8}$$

We primarily cite the complement of this quantity, FNIR(N, R, 0), the fraction of mates not in the top R ranks.

The rank one hit rate is the fraction of mated searches yielding the correct candidate at best rank, i.e., CMC(N, 1). While this quantity is the most common summary indicator of an algorithm’s efficacy, it does not depend on similarity scores, so it does not distinguish between strong (high scoring) and weak hits. It also ignores the fact that an adjudicating reviewer is often willing to look at many candidates.
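Since the CMC sets T = 0, it depends only on the rank at which each mate appears. The Python sketch below assumes a hypothetical input of 1-based mate ranks, one per mated search, with `None` marking a mate that was not returned or a failed search; the first element of the curve is the rank one hit rate, CMC(N, 1).

```python
from typing import List, Optional, Sequence


def cmc(mate_ranks: Sequence[Optional[int]], max_rank: int) -> List[float]:
    """Eq. (8): CMC(N, R) for R = 1..max_rank, ignoring scores (T = 0).

    mate_ranks[i] is the 1-based rank of the mate in mated search i, or None if the
    mate was not on the candidate list or the search failed (counted as a miss).
    """
    n = len(mate_ranks)
    return [sum(1 for rk in mate_ranks if rk is not None and rk <= r) / n
            for r in range(1, max_rank + 1)]


ranks = [1, 1, 3, None, 2]  # hypothetical mate ranks from five mated searches
curve = cmc(ranks, 3)
rank_one_hit_rate = curve[0]            # CMC(N, 1)
fnir_at_rank = [1 - c for c in curve]   # FNIR(N, R, 0), the quantity this report cites
print(curve, fnir_at_rank)
```

Because scores are discarded, a rank-one mate with a very low score counts the same as a strong hit, which is the limitation noted above.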

3.3 DET interpretation